
Managing connections between Power Platform environments using Azure DevOps pipelines

For organizations that use Application Lifecycle Management (ALM) to deploy and maintain their Power Platform solutions, connections can be especially tricky for reasons such as personnel turnover and differing permissions across environments. In this article, I hope to help you navigate these scenarios by providing a clear mental model for how connections work in an ALM solution. We'll cover:

Relationship between connectors, connections, and connection references.

Special considerations for service accounts.

How to declare and maintain connections and connection references across multiple Power Platform environments.

Connectors, connections, and connection references

To understand how to manage connections across multiple Power Platform environments using ALM, it's necessary to first understand what connectors, connections, and connection references are, and how they are related.

Connector: A built-in or custom component that surfaces some (or sometimes all) of a REST API's available endpoints, with information on what parameters can be provided, as well as what information will be returned. Example connectors include Dataverse, Office 365 Users, and SharePoint.

Connection: A set of credentials used to authenticate to a specific service. For example, if you create a Power Automate Flow and manually connect it to Dataverse, Power Platform stores information (such as an authentication token received from the API) so that you don't need to authenticate every time the Flow runs.

Connection reference: In Power Platform solutions (not applicable to Flows created outside of a solution), this component references a connection that exists within that environment. This is the key to managing connections in ALM, as it allows the same connection reference to point to a different connection in each environment. For example, you might have a connection reference called "Dataverse connection reference" that points to your personal connection in the Dev environment, but points to your service account's connection in the Test and Prod environments.

Service account considerations

In a perfect world, we would all find our perfect team and no one would ever leave. In the real world, however, personnel turnover happens. This causes serious issues when the person leaving the team is using their credentials to connect to the various services that are used by the team's Flows and Apps. But the team still needs to securely connect to those services, so what are we supposed to do? One solution for this is "service accounts", which are actually just user accounts created in Microsoft Entra ID that aren't associated with a specific person. The benefit to using a service account instead of a standard user account is that, aside from regular password changes and any conditional access policies (such as requiring on-prem access) that are specific to service accounts, there is no action required when the team changes.

That's great! So, we can just log in as the service account and connect each of our Flows and Apps to their respective connectors using this service account, right? Unfortunately, no. There's one more hidden step that must be completed before the connection references can use these connections: each connection created by a service account must be shared with the service principal (not to be confused with the service account) that is listed as the owner of the deployed Flows and Apps in that environment. We'll go over this in greater detail in the next section, but I felt it necessary to call out this "gotcha" specifically, as it can lead to many difficult-to-debug issues.

Declaring and maintaining connections across multiple environments

For the purposes of this discussion, we'll start with an existing Azure DevOps Repo with the following folder structure:

DemoRepo/
-- DemoSolution/
-- azure-pipelines.yml

This repo deploys a Power Platform solution named DemoSolution using Azure DevOps Pipelines. The deployment steps are defined in the following azure-pipelines.yml file:

trigger:
  - main

pool:
  vmImage: windows-latest

stages:
  - stage: Deploy_To_Test
    jobs:
      - deployment: DeployToTest
        displayName: 'Build, check, and deploy PP solution to test environment'
        environment: 'test'
        strategy:
          runOnce:
            deploy:
              steps:
                - task: PowerPlatformToolInstaller@2
                  displayName: 'Install PP tools required for later steps'
                  inputs:
                    DefaultVersion: true
                - task: PowerPlatformPackSolution@2
                  displayName: 'Pack unpacked, managed solution'
                  inputs:
                    SolutionSourceFolder: '$(System.DefaultWorkingDirectory)\DemoSolution'
                    SolutionOutputFile: '$(Build.ArtifactStagingDirectory)\DemoSolution.zip'
                    SolutionType: 'Managed'
                - task: PowerPlatformChecker@2
                  displayName: 'Check PP solution for issues'
                  inputs:
                    authenticationType: 'PowerPlatformSPN'
                    PowerPlatformSPN: 'Test Environment Service Connection Name'
                    FilesToAnalyze: '$(Build.ArtifactStagingDirectory)\DemoSolution.zip'
                    RuleSet: '0ad12346-e108-40b8-a956-9a8f95ea18c9' # Default ruleset
                - task: PowerPlatformImportSolution@2
                  displayName: 'Import PP solution into target environment'
                  inputs:
                    authenticationType: 'PowerPlatformSPN'
                    PowerPlatformSPN: 'Test Environment Service Connection Name'
                    SolutionInputFile: '$(Build.ArtifactStagingDirectory)\DemoSolution.zip'
                    AsyncOperation: true
                    MaxAsyncWaitTime: '60'
  - stage: Deploy_To_Prod
    jobs:
      - deployment: DeployToProd
        displayName: 'Build, check, and deploy PP solution to prod environment'
        environment: 'prod'
        strategy:
          runOnce:
            deploy:
              steps:
                # Repeat test environment steps using Prod environment service connection
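One way to avoid copying the steps into the Prod stage is an Azure Pipelines step template. The sketch below is an illustration under assumptions, not part of the original repo: the file name templates/deploy-steps.yml, the serviceConnection parameter, and the 'Prod Environment Service Connection Name' value are all hypothetical. It is shown as two YAML documents in one listing, one per file.

```yaml
# File: templates/deploy-steps.yml (hypothetical shared steps template)
parameters:
  - name: serviceConnection   # name of the Power Platform service connection to use
    type: string

steps:
  - task: PowerPlatformToolInstaller@2
    displayName: 'Install PP tools required for later steps'
    inputs:
      DefaultVersion: true
  - task: PowerPlatformPackSolution@2
    displayName: 'Pack unpacked, managed solution'
    inputs:
      SolutionSourceFolder: '$(System.DefaultWorkingDirectory)\DemoSolution'
      SolutionOutputFile: '$(Build.ArtifactStagingDirectory)\DemoSolution.zip'
      SolutionType: 'Managed'
  - task: PowerPlatformChecker@2
    displayName: 'Check PP solution for issues'
    inputs:
      authenticationType: 'PowerPlatformSPN'
      PowerPlatformSPN: ${{ parameters.serviceConnection }}
      FilesToAnalyze: '$(Build.ArtifactStagingDirectory)\DemoSolution.zip'
      RuleSet: '0ad12346-e108-40b8-a956-9a8f95ea18c9'
  - task: PowerPlatformImportSolution@2
    displayName: 'Import PP solution into target environment'
    inputs:
      authenticationType: 'PowerPlatformSPN'
      PowerPlatformSPN: ${{ parameters.serviceConnection }}
      SolutionInputFile: '$(Build.ArtifactStagingDirectory)\DemoSolution.zip'
      AsyncOperation: true
      MaxAsyncWaitTime: '60'
---
# File: azure-pipelines.yml (Prod stage only; the Test stage could reference the same template)
stages:
  - stage: Deploy_To_Prod
    jobs:
      - deployment: DeployToProd
        displayName: 'Build, check, and deploy PP solution to prod environment'
        environment: 'prod'
        strategy:
          runOnce:
            deploy:
              steps:
                - template: templates/deploy-steps.yml
                  parameters:
                    # Hypothetical name, mirroring the Test service connection
                    serviceConnection: 'Prod Environment Service Connection Name'
```

Because template parameters are resolved when the pipeline is compiled, each stage ends up with its own copy of the steps bound to its own service connection.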

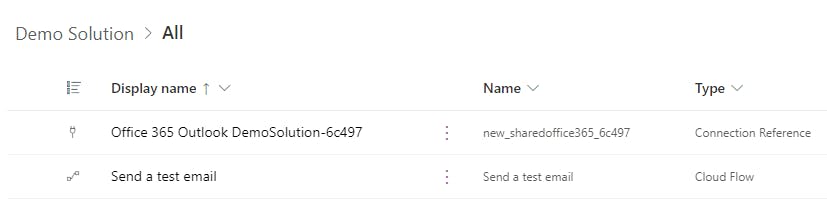

With the azure-pipelines.yml file defined above, we're successfully deploying the DemoSolution into our "Test" and "Prod" environments. Let's look at our DemoSolution components to get an idea of what exactly we're deploying:

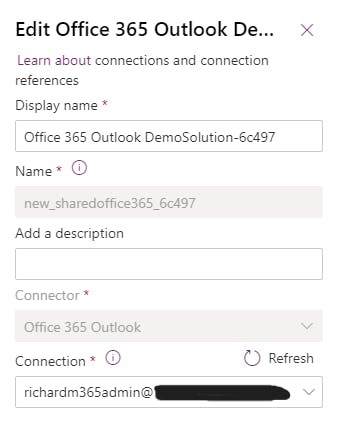

As you can see, we have a Power Automate Flow that uses Outlook to send an email, as well as a connection reference that connects the Power Automate Flow to the Office 365 Outlook connection. We can see this if we open the connection reference.

For our Development environment, it's fine that the connection reference is using my individual account, but let's explore how we can configure our solution to use a different connection in our Test and Prod environments.

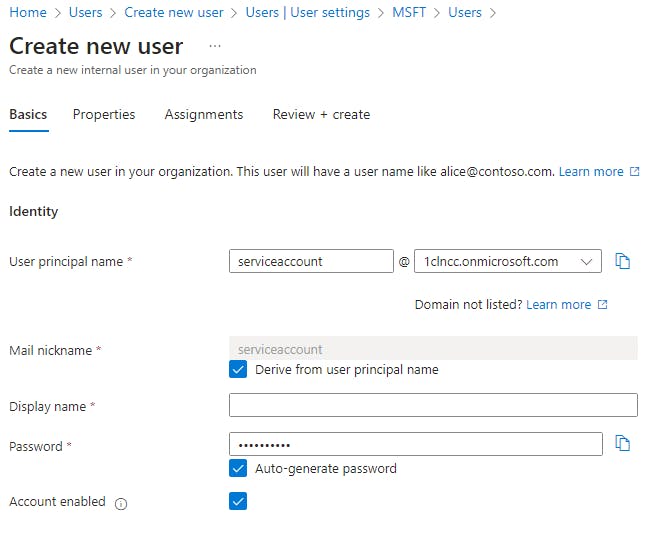

Create service account

Since a service account is just a standard Microsoft Entra ID user account that isn't associated with a person (and typically isn't enrolled in MFA), the steps for creating one will be determined by your organization's Entra ID admins and are therefore likely to differ from the steps shown here. That said, while logged in as a user with permission to create new users, go to Create new user - Microsoft Entra admin center to create a new service account.

All of the default options are fine, so you can select Review + create once you've provided a User principal name and a Display name for your service account. Make sure you save the password for later.

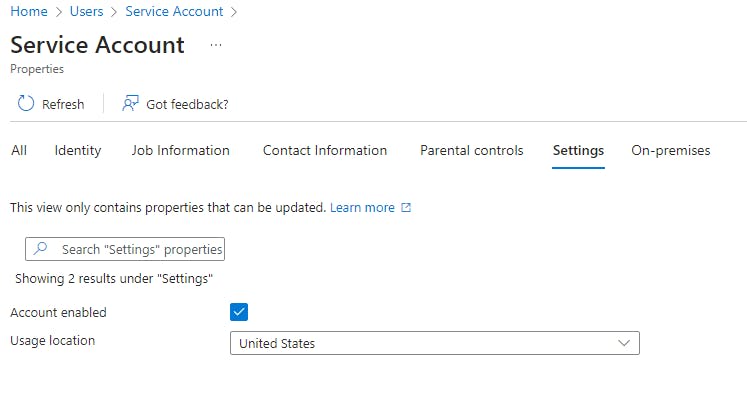

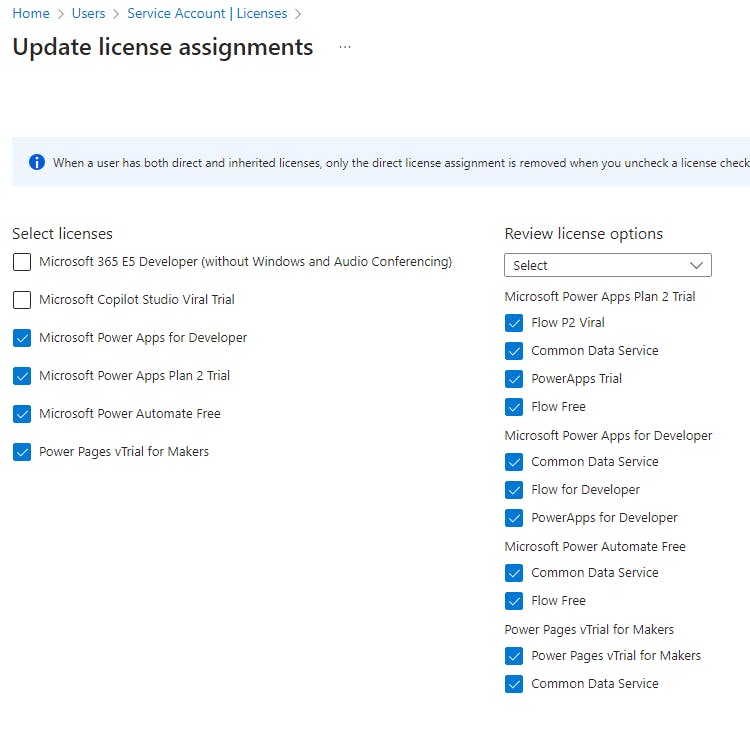

Once you've created the service account, you'll need to assign its usage location: select the service account, select Edit properties, go to the Settings tab, and select the country for the service account's usage location. Then, you'll be able to assign the necessary Power Platform licenses to this service account.

Add service account to target environment(s)

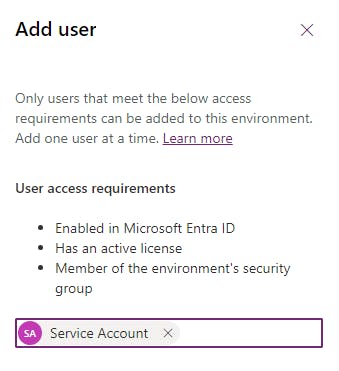

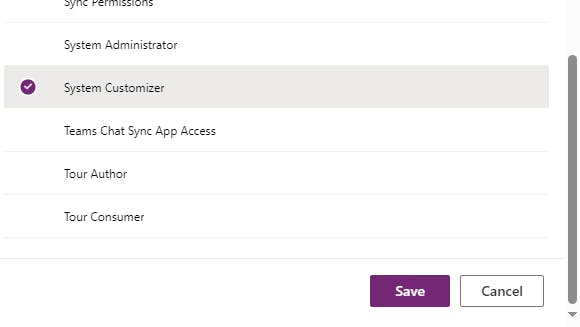

Now that you've assigned the necessary licenses to your service account, add it to each of your target deployment environments by following the steps in Add users to an environment that has a Dataverse database. Make sure to assign the service account at least the System Customizer role so that it can manage the connections.

Create connections in target environment

Great! Our service account has now been added into each of our target Power Platform environments. Next, we need to create each of the connections that will be used by our Flows and Apps. Here are the steps:

Log in to make.powerapps.com as the service account. Be sure to use a separate browser window so that the sign-in doesn't automatically use your browser profile's credentials. Update the password if prompted. If prompted to set up MFA, you can either dismiss the prompt if you're only using the account to follow this article or, if you plan to use this service account for production operations, ask your Entra ID admin about options for disabling the MFA requirement for service accounts.

Select the target Power Platform environment from the list of environments in the top-right corner.

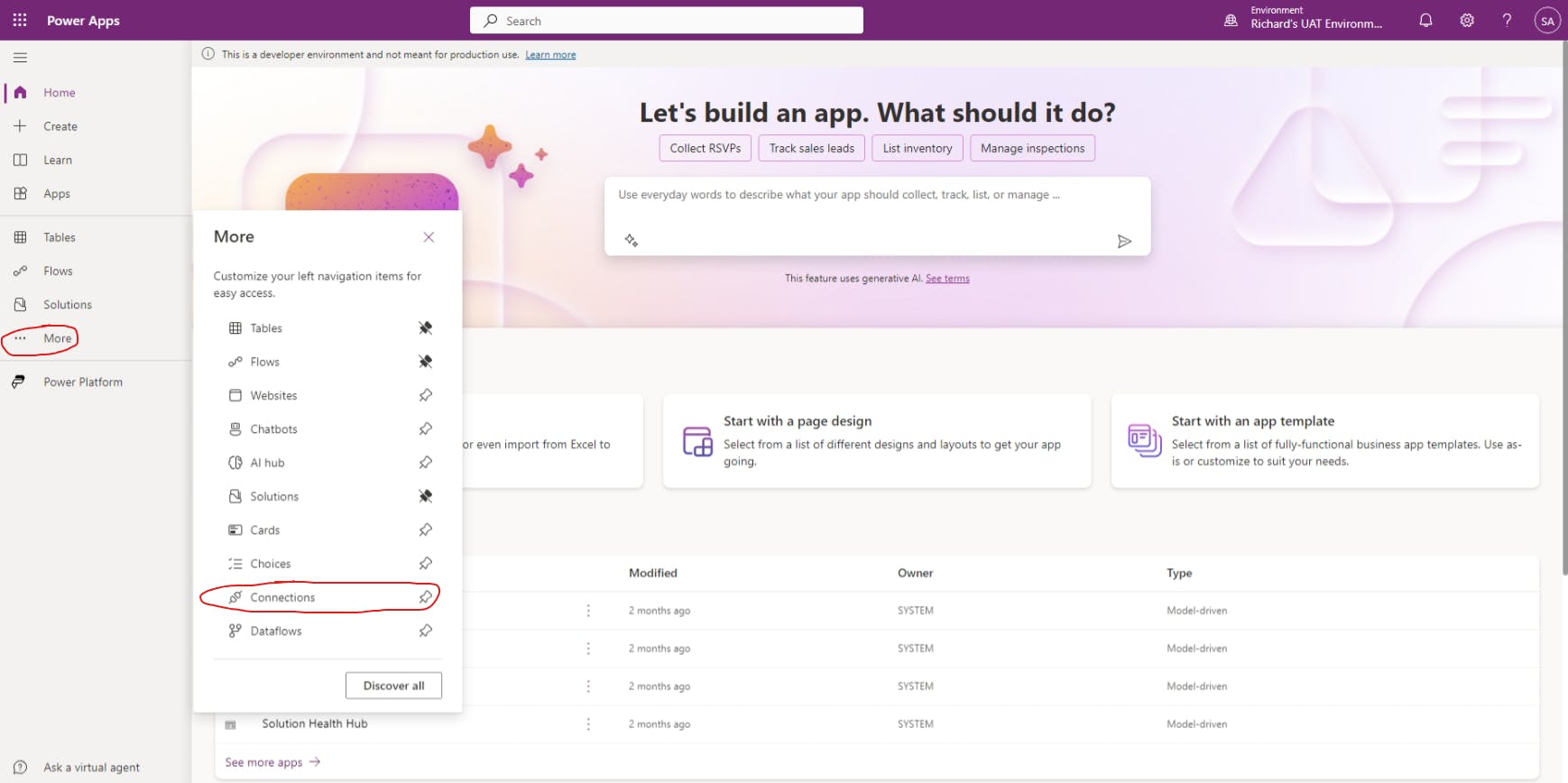

Select More from the left-side navigation menu, and then select Connections.

Select either + New connection or Create a connection.

Search for and select the Office 365 Outlook connector.

Select Create and authenticate using the service account.

Repeat for any additional target environments and connections you need.

Share connections

Now that you've created the necessary connections in your target environment using your service account, you need to share them with the service principal that your Azure DevOps pipeline uses to deploy the Power Platform solution into each environment. Here are the steps:

From the list of connections you've created, select a connection.

Select Share.

Search for and select the service principal. If you aren't sure which service principal, you can get the service principal's application ID from the Service connections section of the Azure DevOps Project settings and then search for it that way.

Save.

Repeat for additional environments and connections.

Add connection info to repo

With our connections created using our service account, and shared with our service principal, we're now ready to map our connection references to use these connections.

At the root of the project, create a deployment-settings folder.

Within the deployment-settings folder, create a folder for each target environment, using the target environment name as the folder name. For example, if you have a target environment with a URL of testenvironment.crm.dynamics.com, you would name this folder testenvironment.

Within the target environment folder, create a JSON file named [SolutionName].json, where SolutionName is the logical name of your solution, not the display name (i.e., without spaces).

Within the JSON file, add the following:

{
  "EnvironmentVariables": [],
  "ConnectionReferences": []
}

Within the ConnectionReferences array, add an object containing the LogicalName of the connection reference, the ConnectionId of the connection created using your service account, and the ConnectorId of the type of connection being used. For example, if you have a connection reference named My Solution Dataverse Connection, with a logical name of mypublisher_MySolutionDataverseConnection, that uses a Dataverse connection in the target environment, your connection reference object might look like this:

{
  "LogicalName": "mypublisher_MySolutionDataverseConnection",
  "ConnectionId": "shared-commondataser-XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX",
  "ConnectorId": "/providers/Microsoft.PowerApps/apis/shared_commondataserviceforapps"
}

To find the ConnectionId and ConnectorId, select the connection from within make.powerapps.com and copy them from the URL, which is in the format: https://make.powerapps.com/environments/[EnvironmentId]/connections/[ConnectorId]/[ConnectionId]/details#. As in the example above, the ConnectorId value in the JSON file is the connector segment of the URL prefixed with /providers/Microsoft.PowerApps/apis/.

Commit the deployment settings to the repo (e.g., git add deployment-settings && git commit -m "Adds deployment settings for [SolutionName]"). The explicit git add is needed because git commit -am only stages files that are already tracked.

You might be wondering why we created a deployment-settings folder and a folder for each environment, when we could have just named our JSON file to include the environment name and the solution name. The reason is scalability: now that you have the folders, adding another solution just means adding the respective JSON files to the environment folders.

Update deployment pipeline

Great work! Not only have you created and shared your service account's connections, but you've gone through the tedious process of mapping those connections to the connection references used in your solution. We're finally at the last step of this configuration, which is to update our Azure DevOps pipeline to use our deployment settings file when deploying the solution, like so:

# ... existing deployment steps
- task: PowerPlatformImportSolution@2
  displayName: 'Import PP solution into target environment'
  inputs:
    authenticationType: 'PowerPlatformSPN'
    PowerPlatformSPN: 'Test Environment Service Connection Name'
    SolutionInputFile: '$(Build.ArtifactStagingDirectory)\DemoSolution.zip'
    UseDeploymentSettingsFile: true
    DeploymentSettingsFile: 'deployment-settings\$(TargetEnvironmentName)\DemoSolution.json'
    AsyncOperation: true
    MaxAsyncWaitTime: '60'
# ... continue existing deployment steps

The key changes to our solution import step of the pipeline are:

We added a UseDeploymentSettingsFile input with a value of true so the Power Platform task knows to use a deployment settings file.

We added a DeploymentSettingsFile input and specified the location of our solution- and environment-specific deployment settings JSON file, so the Power Platform task knows which file to use.
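The DeploymentSettingsFile path relies on a $(TargetEnvironmentName) variable, which has to be defined somewhere in the pipeline. The article doesn't show where it is set, so the following is only a sketch of one option: a stage-level variables block whose value matches the environment folder name under deployment-settings. The value testenvironment comes from the folder example earlier; prodenvironment is a hypothetical name.

```yaml
stages:
  - stage: Deploy_To_Test
    variables:
      # Must match the folder name under deployment-settings/
      TargetEnvironmentName: 'testenvironment'
    jobs:
      - deployment: DeployToTest
        environment: 'test'
        strategy:
          runOnce:
            deploy:
              steps:
                # ... existing deployment steps, including the import task
  - stage: Deploy_To_Prod
    variables:
      # Hypothetical folder name for the Prod environment
      TargetEnvironmentName: 'prodenvironment'
    jobs:
      - deployment: DeployToProd
        environment: 'prod'
        strategy:
          runOnce:
            deploy:
              steps:
                # ... same steps, using the Prod service connection
```

Scoping the variable to the stage keeps the import step identical across stages, with only the variable value changing per environment.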

Now that we've made these changes, let's commit them to the repo (e.g. git commit -am "Adds deployment pipeline").
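As an optional safeguard, a small check step placed before the import task can fail the run early if the settings file is missing or incomplete. This is a minimal sketch, not part of the original pipeline: the step itself and its checks are assumptions, and it assumes the script runs from the same working directory that the relative DeploymentSettingsFile path resolves against.

```yaml
- pwsh: |
    $ErrorActionPreference = 'Stop'
    # Same relative path that the import task's DeploymentSettingsFile input uses
    $path = 'deployment-settings\$(TargetEnvironmentName)\DemoSolution.json'
    if (-not (Test-Path $path)) {
      throw "Deployment settings file not found: $path"
    }
    # Fails here if the file is not valid JSON
    $settings = Get-Content -Raw -Path $path | ConvertFrom-Json
    # Every connection reference entry needs all three properties
    foreach ($ref in $settings.ConnectionReferences) {
      if (-not $ref.LogicalName -or -not $ref.ConnectionId -or -not $ref.ConnectorId) {
        throw "Incomplete connection reference entry in $path"
      }
    }
  displayName: 'Validate deployment settings file'
```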

Conclusion

You now have a reliable way to connect all of your Apps and Flows to the connections they use without relying on a team member's credentials. From here, you can also add the environment variables used by each solution to the deployment settings JSON files.

Thanks for reading and, if you found this article useful, please consider sharing it across your network so others may be able to benefit from this information.

Take care!